I decided to play around with some of the various settings in the neural network to see if I could get better accuracy than with linear regression. Well, I stumbled across the MLPClassifier class in scikit-learn… once again, easy peasy, just what you want. I am starting to really like these guys.

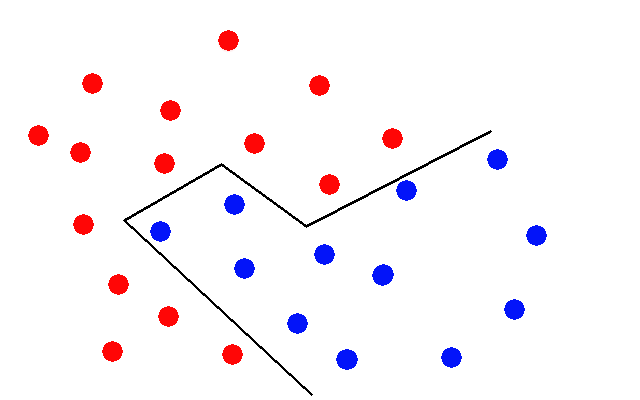

A good place to start seemed like the size of the hidden layer, since I get the sense that’s a pretty critical parameter. The conventional wisdom seems to be that it should be somewhere between the size of the inputs and the size of the outputs. So for this project, somewhere between 1 and 1024. Right now it’s at 256. Sometimes people say to make it smaller, sometimes people say to make it bigger. Who knows? So I tried making it smaller (128), and I got a lot of this business:

```
warnings.warn(
C:\Users\John\AppData\Local\Programs\Python\Python38\lib\site-packages\sklearn\neural_network\_multilayer_perceptron.py:582: ConvergenceWarning: Stochastic Optimizer: Maximum iterations (200) reached and the optimization hasn't converged yet.
```

That doesn’t sound like good news. So I tried making it 512… still the same warnings. So I tried 1024 and the warnings went away!
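The warning says training stopped because it hit the 200-iteration cap, not because the loss settled down. Here is a minimal sketch of how one might detect that and raise the cap with `max_iter`. It runs on made-up data, and `fit_and_report` is a hypothetical helper name, not something from the project.

```python
import warnings

import numpy as np
from sklearn.exceptions import ConvergenceWarning
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in for the real features (assumption: any small numeric
# matrix will do for showing the warning).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 20))
y = (X[:, 0] + X[:, 1] > 0).astype(int)


def fit_and_report(max_iter):
    """Fit a one-hidden-layer MLP; return (iterations run, hit the cap?)."""
    clf = MLPClassifier(hidden_layer_sizes=(128,), max_iter=max_iter, random_state=0)
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")  # make sure the warning is recorded
        clf.fit(X, y)
    hit_cap = any(issubclass(w.category, ConvergenceWarning) for w in caught)
    return clf.n_iter_, hit_cap


for max_iter in (5, 2000):
    n_iter, hit_cap = fit_and_report(max_iter)
    print(f"max_iter={max_iter}: ran {n_iter} iterations, hit the cap: {hit_cap}")
```

With a tiny cap the fit always runs out of iterations. A larger `max_iter` gives the optimizer room to stop on its own, at the cost of longer training.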

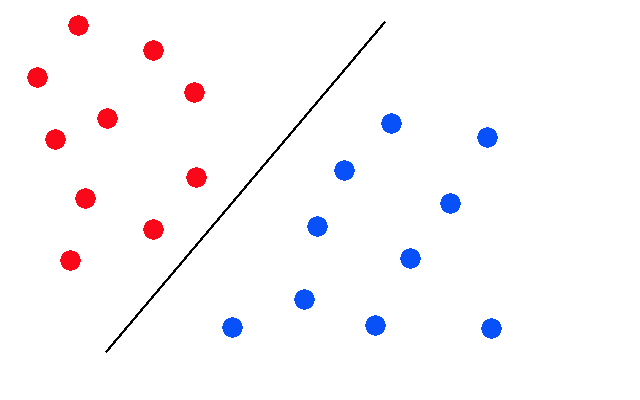

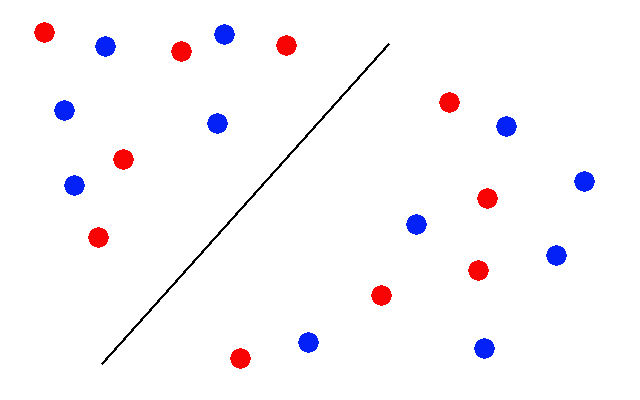

The results really weren’t what I was looking for, though. I would describe them as “overconfident”. As in, the probabilities were always either 99% or 1% for each mood. It started to look like the linear regression did before we discovered that predict_proba() function. Not very interesting! I want something more nuanced.
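One way to put a number on "overconfident" is to count how many predicted probabilities sit right next to 0 or 1. This is only a sketch: `overconfident_fraction` and its 0.01 margin are hypothetical choices, and the sample values are hand-made rather than real model output.

```python
import numpy as np


def overconfident_fraction(probs, margin=0.01):
    """Share of probabilities that fall within `margin` of 0 or 1."""
    probs = np.asarray(probs, dtype=float)
    extreme = (probs <= margin) | (probs >= 1.0 - margin)
    return float(extreme.mean())


# Hand-made stand-in; in practice this would be something like
# clf.predict_proba(X)[:, 1] for a fitted classifier.
sample_probs = [0.995, 0.003, 0.62, 0.999, 0.41, 0.001]
print(overconfident_fraction(sample_probs))
```

A value close to 1.0 would match the "always 99% or 1%" behavior described above.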

That’s when I discovered yet another nifty feature of scikit, thanks to this dude: the GridSearchCV class. This thing will chop up my dataset into cross-validation folds and hold a contest between all the different combinations of parameters, all with one call to fit():

```python
import pickle

import pandas as pd
from sklearn.model_selection import GridSearchCV
from sklearn.neural_network import MLPClassifier

# note: `mood` is set outside this snippet, one run per mood

# read data
train = pd.read_csv('elmo/train_' + mood + '.csv')

# load elmo_train_new
with open('elmo/elmo_train_' + mood + '.pickle', 'rb') as pickle_in:
    elmo_train_new = pickle.load(pickle_in)

# every combination of these values gets tried
param_grid = [
    {
        'activation': ['identity', 'logistic', 'tanh', 'relu'],
        'solver': ['lbfgs', 'sgd', 'adam'],
        'hidden_layer_sizes': [
            (128,), (256,), (512,), (1024,)
        ]
    }
]

# create grid search
grid_search = GridSearchCV(MLPClassifier(), param_grid, scoring='accuracy')
grid_search.fit(elmo_train_new, train['label'])

# print best parameters
print('best parameters for ' + mood + ':')
print(str(grid_search.best_params_))
```

I don’t even know what any of this stuff means yet, and scikit is just going to find the best ones for me!
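For a rough idea of how much work that one call sets up, the grid can be expanded and counted. This sketch assumes 5 cross-validation folds, which is the default in recent scikit-learn releases when `cv` is not passed. If your version differs, change `n_folds`.

```python
from sklearn.model_selection import ParameterGrid

param_grid = [
    {
        'activation': ['identity', 'logistic', 'tanh', 'relu'],
        'solver': ['lbfgs', 'sgd', 'adam'],
        'hidden_layer_sizes': [(128,), (256,), (512,), (1024,)],
    }
]

# Assumption: 5 folds (GridSearchCV's default in recent releases).
n_folds = 5

n_candidates = len(ParameterGrid(param_grid))
n_fits = n_candidates * n_folds + 1  # +1 for the final refit on all the data

print(f"{n_candidates} candidates x {n_folds} folds + 1 refit = {n_fits} fits per mood")
```

GridSearchCV also takes an `n_jobs` argument (for example `n_jobs=-1`) to spread those fits across CPU cores, which may help with the wait.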

Unfortunately, this process is going to take something on the order of 18 hours, as far as I can tell… it’s already been running for about five and a half, and it’s only finished four of the moods. Here’s what the choices look like so far:

```
best parameters for grateful:
{'activation': 'identity', 'hidden_layer_sizes': (128,), 'solver': 'sgd'}
best parameters for happy:
{'activation': 'relu', 'hidden_layer_sizes': (128,), 'solver': 'adam'}
best parameters for hopeful:
{'activation': 'relu', 'hidden_layer_sizes': (1024,), 'solver': 'adam'}
best parameters for determined:
{'activation': 'relu', 'hidden_layer_sizes': (1024,), 'solver': 'adam'}
```

Interesting, though obvious in hindsight: we can configure the networks differently for each mood! This makes sense since the dataset is so small, and some moods are present much more frequently than others. For some of the moods that don’t appear very often, there may really be no optimal solution.
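A per-mood setup could be as simple as a dictionary keyed by mood. The sketch below copies the four results printed above. The names `best_params` and `build_classifier` are hypothetical, and nothing is trained here.

```python
from sklearn.neural_network import MLPClassifier

# Best parameters reported so far by the grid search (copied from the output above).
best_params = {
    'grateful': {'activation': 'identity', 'hidden_layer_sizes': (128,), 'solver': 'sgd'},
    'happy': {'activation': 'relu', 'hidden_layer_sizes': (128,), 'solver': 'adam'},
    'hopeful': {'activation': 'relu', 'hidden_layer_sizes': (1024,), 'solver': 'adam'},
    'determined': {'activation': 'relu', 'hidden_layer_sizes': (1024,), 'solver': 'adam'},
}


def build_classifier(mood):
    """Create an unfitted MLPClassifier configured for one mood."""
    return MLPClassifier(**best_params[mood])


# One untrained classifier per mood, each with its own settings.
classifiers = {mood: build_classifier(mood) for mood in best_params}
print(classifiers['grateful'])
```

Each classifier would still need its own `fit()` call on that mood's training data.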

Anyway, since I have something like 12 hours to kill, I need to find something else to do! And I think I have settled on a new project. I have this desktop Java program that I wrote for myself that I call “Ledger”; it is basically an electronic checkbook. I actually started this blog thinking that rewriting it for the web would be my first project, and I was going to use Java/Spring on the back end and Bootstrap & jQuery on the front end.

Well, that became a rather frustrating endeavor, because the grids would jump around all over the place whenever I tried to do anything. There was always a round trip to the server, and everything had to redraw itself afterwards. This really needs to be a single page application! And now that I am more familiar with React and Django, I think we have a better stack all around. We can take advantage of Django’s automatic database persistence and maybe not have to maintain all the classes that look just like database tables.

So, I’ll be jumping back and forth between these two projects as time permits. Hopefully that will not make the blog too confusing.